At the core of Natural Language Processing lies extensive language data. Large amounts of text or speech that faithfully represent natural language are essential to the performance of artificial language systems. Language models draw on diverse forms of text, from Wikipedia articles to Twitter threads. In linguistics, such a body of language data is called a corpus: an extensive and principled collection of authentic, naturally occurring language.

Although corpora have drawn increased attention since the advent of large language models (LLMs), the concept is not new in linguistics. It dates back to the early 20th century, if not earlier. Lexicographers of that era manually compiled large samples of language to build dictionaries, a rudimentary form of corpus. Grammarians and other linguists also compiled corpora by hand for purposes such as language teaching and the study of language acquisition in children. The so-called “structural” linguists, who dominated the field until the 1950s, placed particular value on corpora because they held that language should be described by analyzing the distribution of forms in observed data. Following this approach, Charles C. Fries, for example, manually gathered telephone conversations from approximately 300 speakers, totaling about 250,000 words. Field linguist Franz Boas devoted himself to building a substantial corpus of texts from Native American languages.

The first electronic corpus appeared in the 1960s. W. Nelson Francis and Henry Kučera of Brown University compiled a corpus of about one million words of American English, famously known as the Brown corpus. Since then, driven by advances in computer hardware, linguistic corpora have rapidly grown in size and diversified in scope. For instance, the Corpus of Contemporary American English (COCA), currently one of the most widely used corpora of American English, contains over one billion words drawn from eight different genres.

Types of Corpora and Their Use

Corpora can be broadly categorized into two types: generalized and specialized. A generalized corpus aims to represent all aspects of a language and typically consists of a large number of words and/or documents. Examples of generalized corpora include the British National Corpus and COCA, mentioned earlier. A specialized corpus, on the other hand, is constructed to represent specific aspects of a language, such as language produced by particular user groups (e.g., children, teenagers, second language learners, people with aphasia) or in specific settings (e.g., university, business). Examples of specialized corpora include the Child Language Data Exchange System (CHILDES), a large corpus of language produced by developing children, and the Professional English Research Consortium (PERC) corpus, which contains English academic journal texts in science, engineering, technology, and other fields.

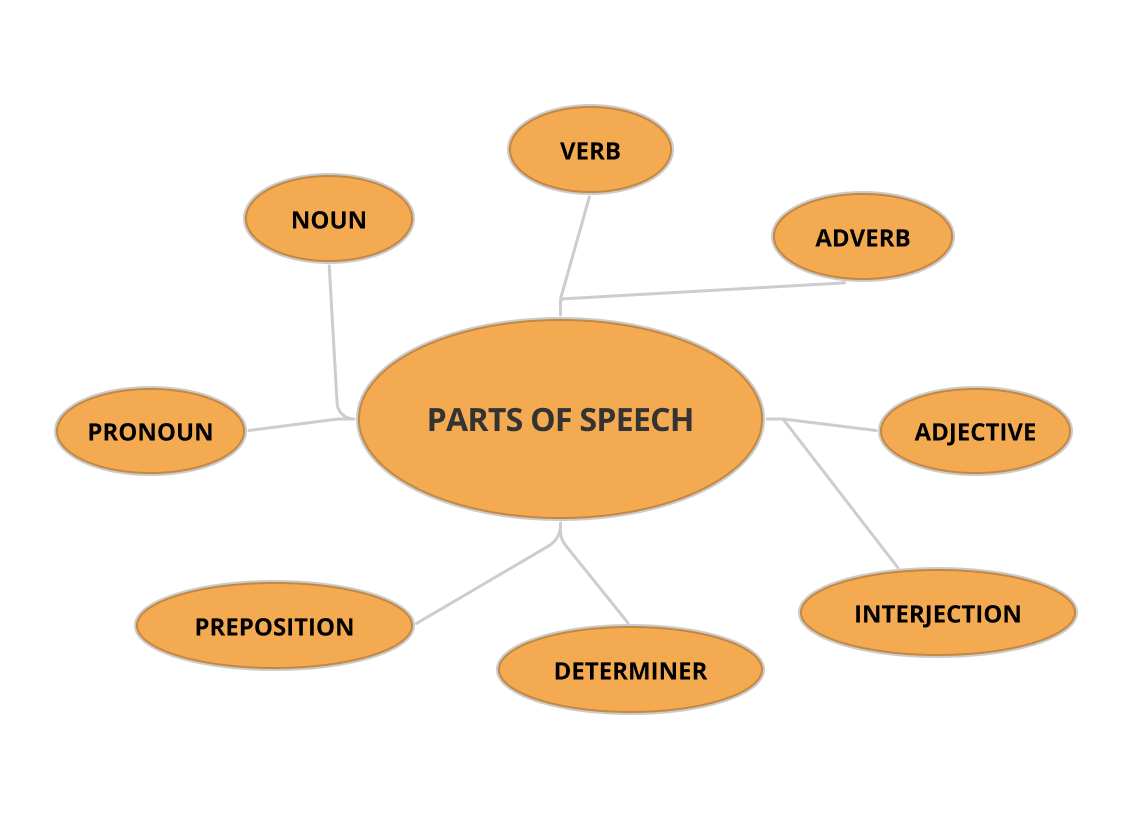

Corpora are frequently enriched with annotations containing linguistic information. Most commonly, texts undergo Part of Speech (POS) tagging, in which the grammatical category of each word is identified. Beyond POS tags, corpora can carry annotations for syntactic structure, semantic roles (the roles that the arguments of a predicate play in a sentence, such as agent, patient, or instrument), named entities (as identified by Named Entity Recognition, NER), sentiment, and more.

To illustrate, the Penn Treebank project annotates a collection of Wall Street Journal articles with POS tags and phrase-structure (constituency) trees. Another syntactic resource is the Google Books Syntactic N-grams dataset, which consists of syntactic tree fragments automatically extracted from the English portion of the Google Books collection. Some corpora are annotated with semantic information: the Proposition Bank, for example, provides a collection of English sentences manually annotated with semantic roles. Others include named-entity annotation; GENETAG, for instance, is a corpus of 20,000 sentences from the MEDLINE database annotated with gene and protein names.
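
To make token-level annotation more concrete, here is a minimal Python sketch of how a single annotated sentence might be stored and queried. Each token carries a POS tag from the Penn Treebank tagset and a named-entity tag in the common BIO scheme (B- begins an entity, I- continues it, O is outside any entity). The `Token` layout, the entity types `PER` and `DATE`, and the tags themselves are hypothetical and were assigned by hand for illustration; real corpora such as the Penn Treebank or GENETAG use their own file formats.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Token:
    """One token of an annotated sentence (hypothetical layout for illustration)."""
    form: str  # the word as it appears in the text
    pos: str   # Part of Speech tag from the Penn Treebank tagset
    ner: str   # named-entity tag in BIO format: "B-TYPE", "I-TYPE" or "O"


# A toy annotated sentence; all tags were assigned by hand, not taken from a real corpus.
SENTENCE = [
    Token("Pierre", "NNP", "B-PER"),
    Token("Vinken", "NNP", "I-PER"),
    Token("will", "MD", "O"),
    Token("join", "VB", "O"),
    Token("the", "DT", "O"),
    Token("board", "NN", "O"),
    Token("in", "IN", "O"),
    Token("November", "NNP", "B-DATE"),
    Token(".", ".", "O"),
]


def extract_entities(tokens):
    """Group BIO-tagged tokens into (entity_text, entity_type) pairs."""
    entities = []
    words, etype = [], None
    for tok in tokens:
        starts_new = tok.ner.startswith("B-") or (
            tok.ner.startswith("I-") and tok.ner[2:] != etype  # lenient: stray I- starts an entity
        )
        if starts_new:
            if words:  # close the entity that was open before this one
                entities.append((" ".join(words), etype))
            words, etype = [tok.form], tok.ner[2:]
        elif tok.ner.startswith("I-"):
            words.append(tok.form)  # continue the current entity
        else:  # "O": the token lies outside any entity
            if words:
                entities.append((" ".join(words), etype))
            words, etype = [], None
    if words:  # an entity may end at the last token
        entities.append((" ".join(words), etype))
    return entities


if __name__ == "__main__":
    nouns = [t.form for t in SENTENCE if t.pos.startswith("NN")]
    print(nouns)                       # ['Pierre', 'Vinken', 'board', 'November']
    print(extract_entities(SENTENCE))  # [('Pierre Vinken', 'PER'), ('November', 'DATE')]
```

Keeping every annotation layer aligned to the same token sequence is what lets one corpus answer both grammatical queries (all nouns) and semantic ones (all named entities).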

Use of Corpora in NLP

Corpora have traditionally served as tools for investigating specific aspects of language use, including collocation, frequency, preferred constructions, patterns of errors, and more. With the emergence of computer technology and machine learning algorithms, they have become essential resources for model training and validation. Large language models are now trained on vast amounts of language data. For instance, OpenAI’s GPT-3 175B model was trained on 300 billion tokens gathered from sources such as Common Crawl, WebText2, and Wikipedia. Meta’s LLaMA 65B model, on the other hand, was trained on 1.4 trillion tokens drawn from sources including English CommonCrawl, C4, GitHub, Wikipedia, arXiv, and Stack Exchange. In our PolyAnalyst software, diverse corpora such as the Open American National Corpus, the Penn Treebank, the CoNLL corpus, Europarl, the Russian National Corpus, and Wikipedia are used in various modules. The use of large language datasets is poised to expand further, making the skillful handling of such data a crucial competence in the field of Natural Language Processing.
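
The traditional frequency and collocation analyses can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions: a toy corpus written for this example, a crude regular-expression tokenizer, and pointwise mutual information (PMI) as the association measure. PMI is only one of several measures used in corpus linguistics, and production NLP pipelines tokenize text very differently (LLMs, for example, use subword tokenizers).

```python
import math
import re
from collections import Counter

# A toy corpus written for this example; real corpora hold millions of words or more.
CORPUS = """
Strong tea is popular. She drank strong tea with milk.
Powerful computers process text. He ordered strong tea again.
The computers were powerful and the tea was hot.
"""


def tokenize(text):
    """Lowercase the text and split it into word tokens (a deliberately crude tokenizer)."""
    return re.findall(r"[a-z']+", text.lower())


def collocations(tokens, min_count=2):
    """Score adjacent word pairs by pointwise mutual information (PMI).

    PMI(x, y) = log2(P(x, y) / (P(x) * P(y))). A positive score means the pair
    occurs together more often than the individual word frequencies predict.
    """
    unigrams = Counter(tokens)
    bigrams = Counter(zip(tokens, tokens[1:]))
    n_uni, n_bi = len(tokens), len(tokens) - 1
    scores = {}
    for (x, y), count in bigrams.items():
        if count < min_count:  # PMI estimates from rare pairs are unreliable
            continue
        p_xy = count / n_bi
        p_x, p_y = unigrams[x] / n_uni, unigrams[y] / n_uni
        scores[(x, y)] = math.log2(p_xy / (p_x * p_y))
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    tokens = tokenize(CORPUS)
    print(Counter(tokens).most_common(2))  # [('tea', 4), ('strong', 3)]
    for (x, y), score in collocations(tokens):
        print(f"{x} {y}: PMI = {score:.2f}")
```

In this toy corpus only "strong tea" occurs often enough to pass the `min_count` threshold. The threshold reflects a general trade-off: PMI tends to overrate rare pairs, so collocation studies usually require a minimum frequency and therefore depend on large corpora.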