There are many reasons for the explosion of machine learning advancements over the past decade. We now have vastly improved hardware for fast computation, and memory is cheaper than ever. Data is now “Big Data,” and it is both jealously hoarded and publicly available in repositories such as ImageNet. Individually, these advancements are already a blessing for the technology-space. But for artificial intelligence (AI), they have opened the gates for something truly powerful—Neural Networks.

Neural Whatnow?

Neural Networks. You’ve probably heard of them. They are at the forefront of the machine learning craze and are the driver of many of the most impressive advancements. The technology that led machines to the best humans in Go and the popular video game Starcraft? Neural Networks. The backbone of algorithms that can recognize images and faces, which are igniting a surveillance and privacy panic? Neural Networks.

And yet, Neural Networks aren’t some new idea spawned from the incubator of a giant tech company. They aren’t some stroke of genius from a college student turned dropout who went on to found a revolutionary tech firm. Neural Networks are in fact… old hat. Or they were.

The concept of a Neural Network (something we will get to later) has been around for years. They date back to the 1970s, and simpler versions of them existed even in the 1940s! So, if they have existed for decades, why are they only popular now?

The answer is related to the hardware and data advancements mentioned earlier. Neural Networks crunch a lot of numbers. They also need a lot of data to help them learn. Up until the past decade, this made training anything but the simplest networks highly time-consuming and expensive.

With all the great improvements to hardware over the years, the possibility of using more advanced Neural Networks became possible. Aided by hardware demands from the entertainment industry and now cryptominers, GPUs (graphical processing units) have been developed which can calculate specific mathematical operations at lightning speed. Luckily, these same kinds of operations occur in training neural networks. Using the technology originally developed for beautiful visuals in film and video games helps us train networks at a fraction of the time it takes a traditional CPU.

What’s in a Name?

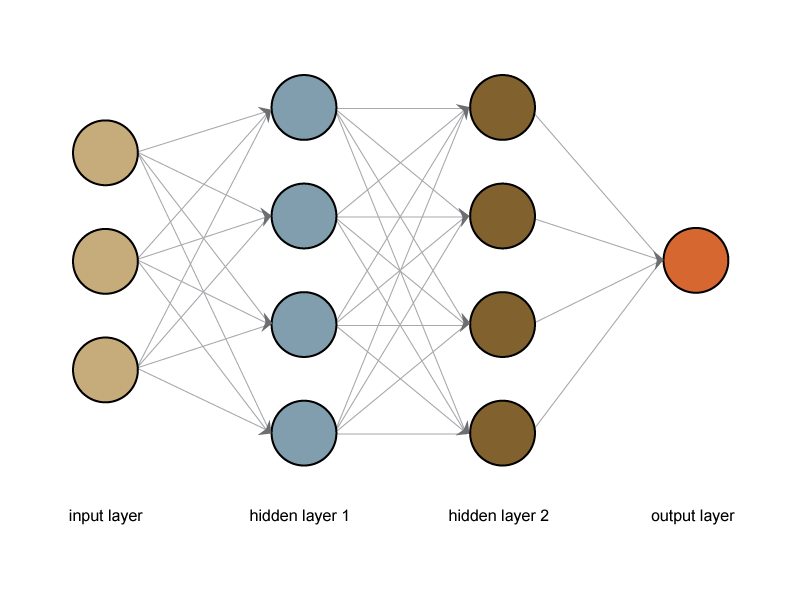

The power of Neural Networks may be evident, but at this point, one may also be wondering what they are in the first place. The name gives some clues. A Neural Network isn’t a singular object that makes decisions by itself. It is, as implied, a network of smaller objects all connected. A network of what, then? We call them Neurons. Neurons as in the cells inside our brains? Yes! Well, no. But sort of!

Neurons in Neural Networks can be thought of as being like the neurons in brains. They are tiny, individual units that are connected to other neurons in a large, structured network. These connections allow tiny pieces of data to flow between them. In our brains, these are electrical pulses. In the Neural Network, we send numbers between Neurons. The Neurons then take all the numbers fed to them by the Neurons they are connected to and process them. The process isn’t complicated—in fact, it is painfully trivial. After all, it is just a tiny unit—a single cell in our brain. But it then sends the processed information out to other Neurons it is connected to. Another number. Another electrical pulse. And so on. Tiny Neurons are fed tiny pieces of information, perform tiny pieces of computation on that snippet, and feed it forward to other Neurons, which do the same over and over until we eventually reach a final set of Neurons—the output of which is our final result.

From the collective effort of many small individual units networked together in a perfectly calibrated balance, we can achieve enormous computational power. The Whole is greater than the Sum of its parts.

Balancing Act

If that all sounded magical and farfetched, then don’t worry—it is. How exactly are we supposed to arrange these Neurons in such a perfect balance that their combined minuscule computations lead to a machine recognizing human faces? We can’t. So how do we get this to work? Well, we are talking about Machine Learning after all. And Machine Learning is how we are going to solve this. We aren’t going to calibrate the Neurons to be in balance. They are going to calibrate themselves.

We aren’t going to calibrate the Neurons to be in balance. They are going to calibrate themselves.

In order to achieve this, we need training data. We need data that is labeled with the desired output we want from the machine. We can provide this data to the unconfigured Neural Network. It will process the data and likely output something nonsensical and useless. But this is fine. We can use a mathematical function called Error. This Error is just a measurement of how different our output was from our desired targets. Then, using Calculus,we can discover how much of that Error is caused by the calibration of each one of our Neurons! Using this information, we can then tune the Neurons slightly and repeat the process—feed data into the Network, observe the output, calculate the Error, and use Calculus to know how to tune the Neurons to make them more accurate. This process continues over time until we have converged to a calibrated Network. This process of using Error functions, Calculus, and tuning is what Machine Learning is.

Power at a Price

Although it is conceptually simple (at least in this explanation that ignores the details), it is very resource intensive. At the moment I am fine tuning a Neural Network on my own desktop to recognize Western Art styles. Although the dataset is only a few GB, it takes almost an hour to run a single iteration of the training. It will take nearly two days to complete the 50-iteration learning schedule I planned. And even then, I may need to schedule another one if the Network still needs to learn more! And I’m not running this on some dusty machine I dragged out of the aughts. This is an almost brand-new desktop powered with a 3.7 GHz AMD Rhyzen 8-core processor with 32GB of RAM available and virtually no other load on the machine.

Neural Networks are expensive to train. If you want to increase performance, you could pay for an expensive GPU, but that might set you back nearly a thousand dollars. Companies and research institutions may have the funds to throw at this problem, but individuals, small companies, and small research groups may not. Luckily, they don’t need to anymore.

Cloud services have opened access to remote processing to provide other computing options to those desiring to train a Neural Network. Don’t have an expensive rig? No worries, just rent one remotely from Google at a fraction of the price.

Neurally Networked World

Neural Networks are here and they aren’t leaving. New advancements and computing architectures are constantly being published. Convolutional Networks are good at processing images and Recurrent Networks can handle variable sized data, streaming data, or sequential data. Neural Networks are powerful, indeed—far more so than other solutions we have. But they don’t mimic what is really occurring in our brains. There are some deep flaws even in our most advanced networks. For instance, it is extremely easy to confuse a Neural Network. A Network designed to recognize stop signs can be fooled by a few well-placed stickers on a sign. There are deep safety concerns if these are going to be used in self-driving cars for example.

Neural Networks are deeply dependent on the data used to train them (just as we discussed in a previous article). They are also dependent on how we configure their output. Most networks are designed to give a decision. For object detection, the network must return what it thinks the input image is. But what happens when we feed the network “nothing,” such as a completely blank image or fuzzy static noise? The network is forced to return something so it “sees,” say, a dog in the empty space. This is nonsense. Why would it choose one object over another in these cases where a human would just refuse to rigidly define nonsense? As another example, if we slightly alter an image by inserting some imperceptibly small random noise, we can completely trick a Neural Network. Where it before correctly thought that the image was a butterfly, it now thinks the image is a truck, while a human sees no difference in the images. This is bad, and it reflects deep issues with Neural Networks as we construct them today.

Neural Networks are occupying a liminal space. They are simultaneously scarily powerful and laughably simple and ignorant. They can best the human masters and yet be duped by the smallest of changes. We won’t be seeing Neural Networks achieve human-like sentience any time soon, and they aren’t ready for deployment in many other types of systems. But they are already being used in ways that should cause alarm.

China is already using facial recognition technology to tag members of the Uighur ethnic minority group. Accurate voice recognition ensures individuals could potentially be tracked even if they are not near a camera by turning the phones in our pockets into monitoring devices. “Deep Fakes” are a growing type of video that can modify existing videos to map one person’s face and voice over another’s to create a fake video that could be used for blackmail or disinformation. While Terminator remains science-fantasy, armed military drones using neural networks for stabilization, navigation, targeting, and tactics would revolutionize armed conflicts in ways impossible to predict.

Though neural networks are being used to oppress in some places they are also being used to save in others. Medical facilities increasingly deploy network systems to detect ailments such as cancer or infectious diseases. Laboratories can use similar networks for modeling complex biomolecules and developing treatments. Neural networks are even being used in traffic light control systems to increase vehicle flow and reduce accidents.

Where will Neural Networks take us next? It’s hard to say. But it seems evident that the world is caught and will remain in a web of Neurons.